Why Use GLM Instead of OLS

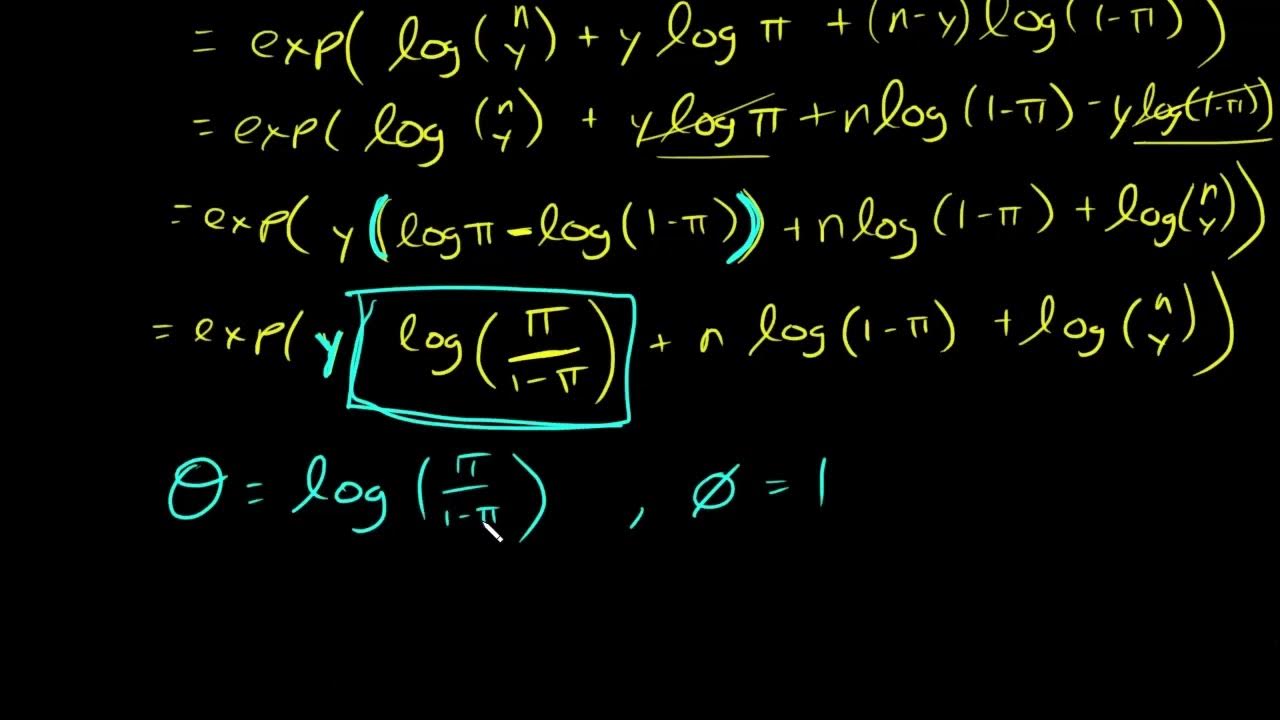

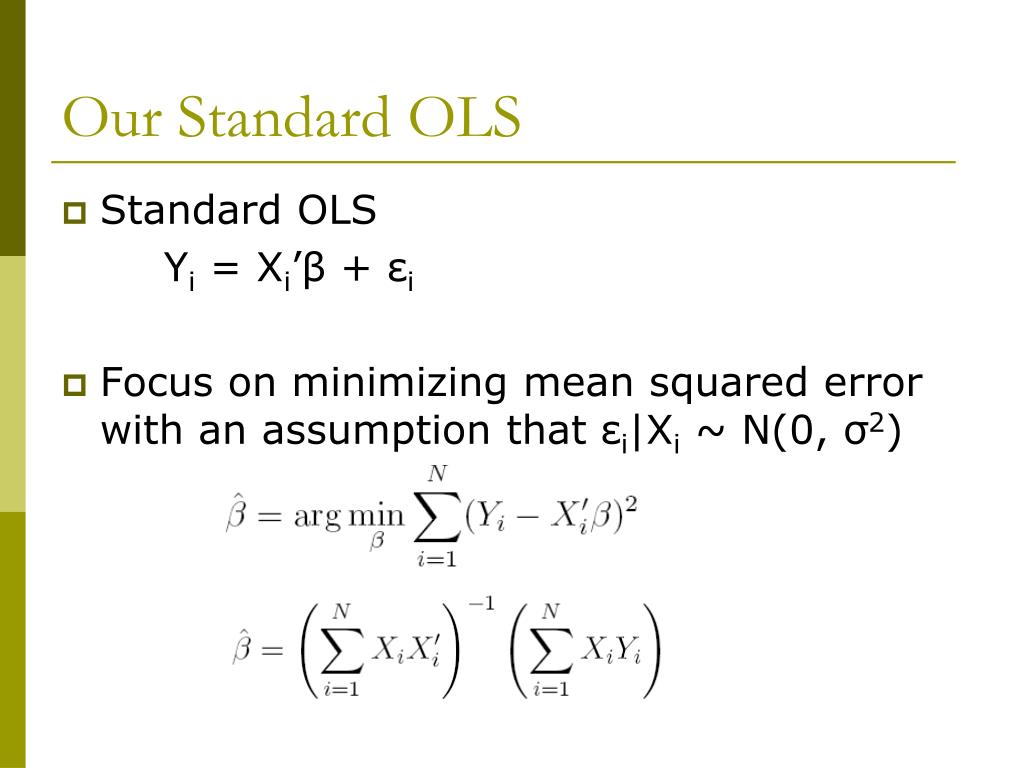

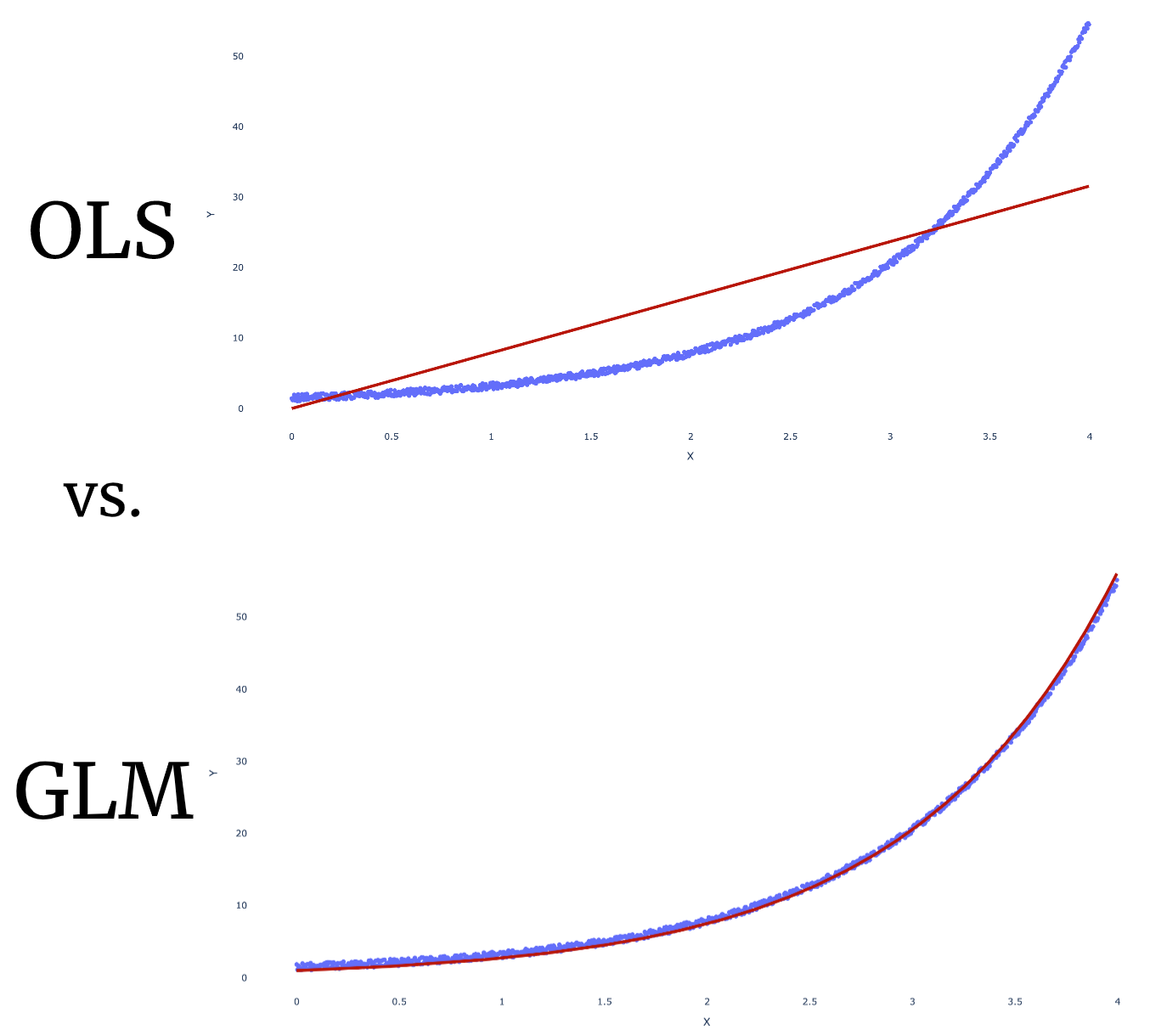

One common question is why one would choose to use a glm with, for example, a log link instead of estimating via ols the regression model:

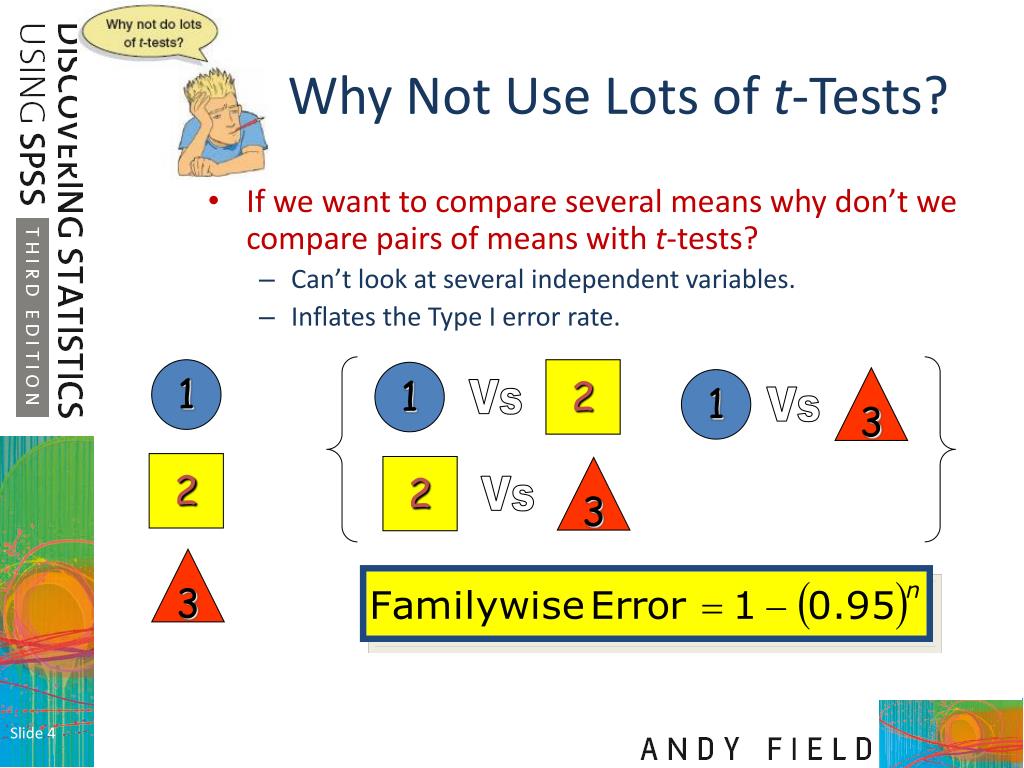

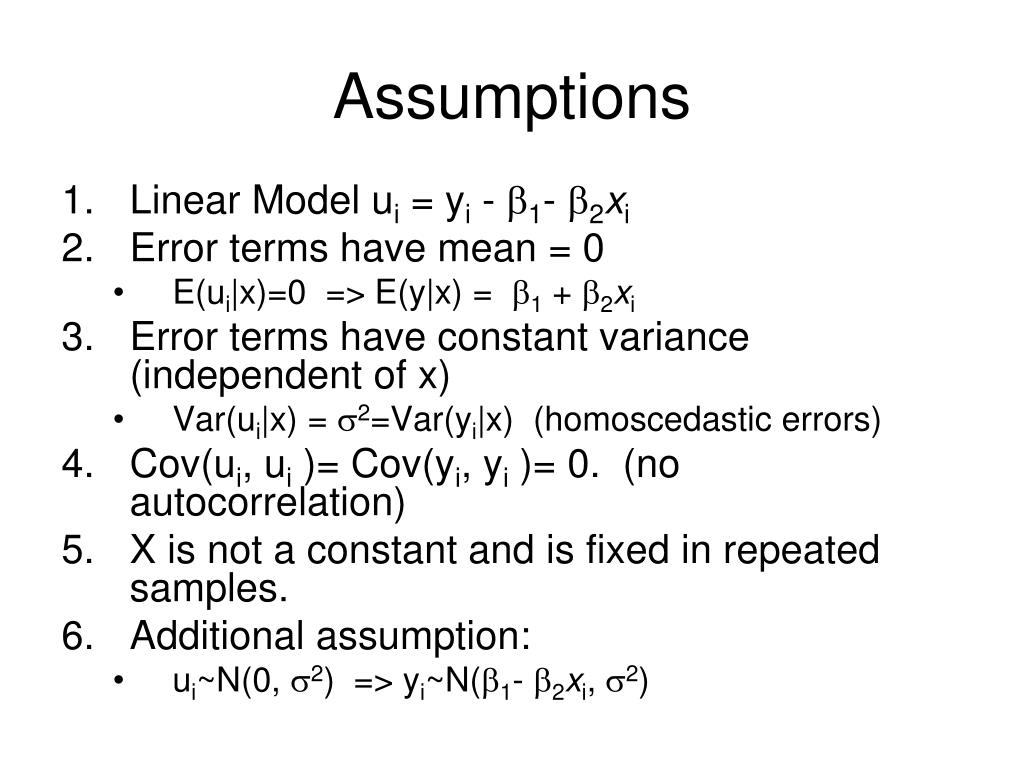

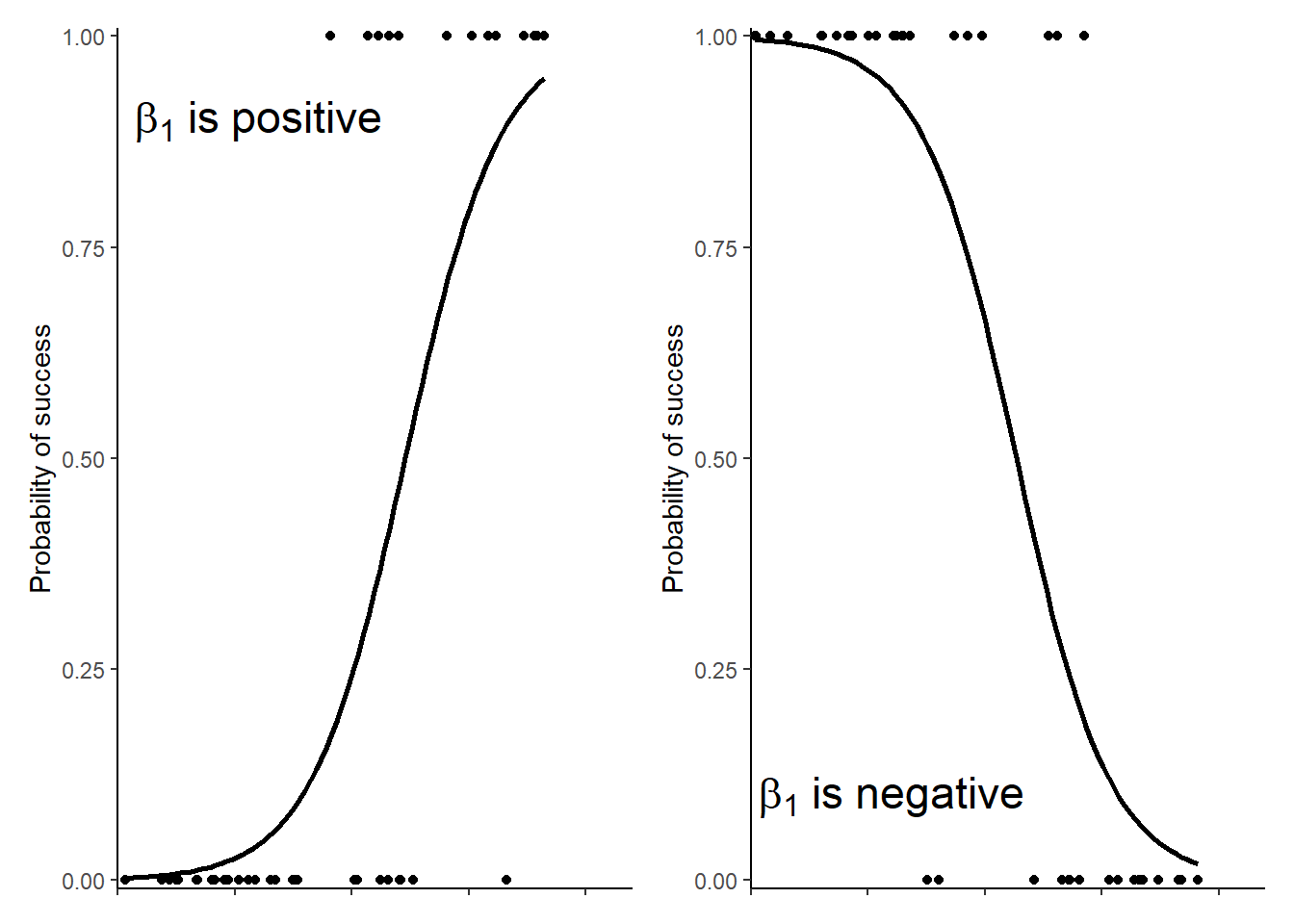

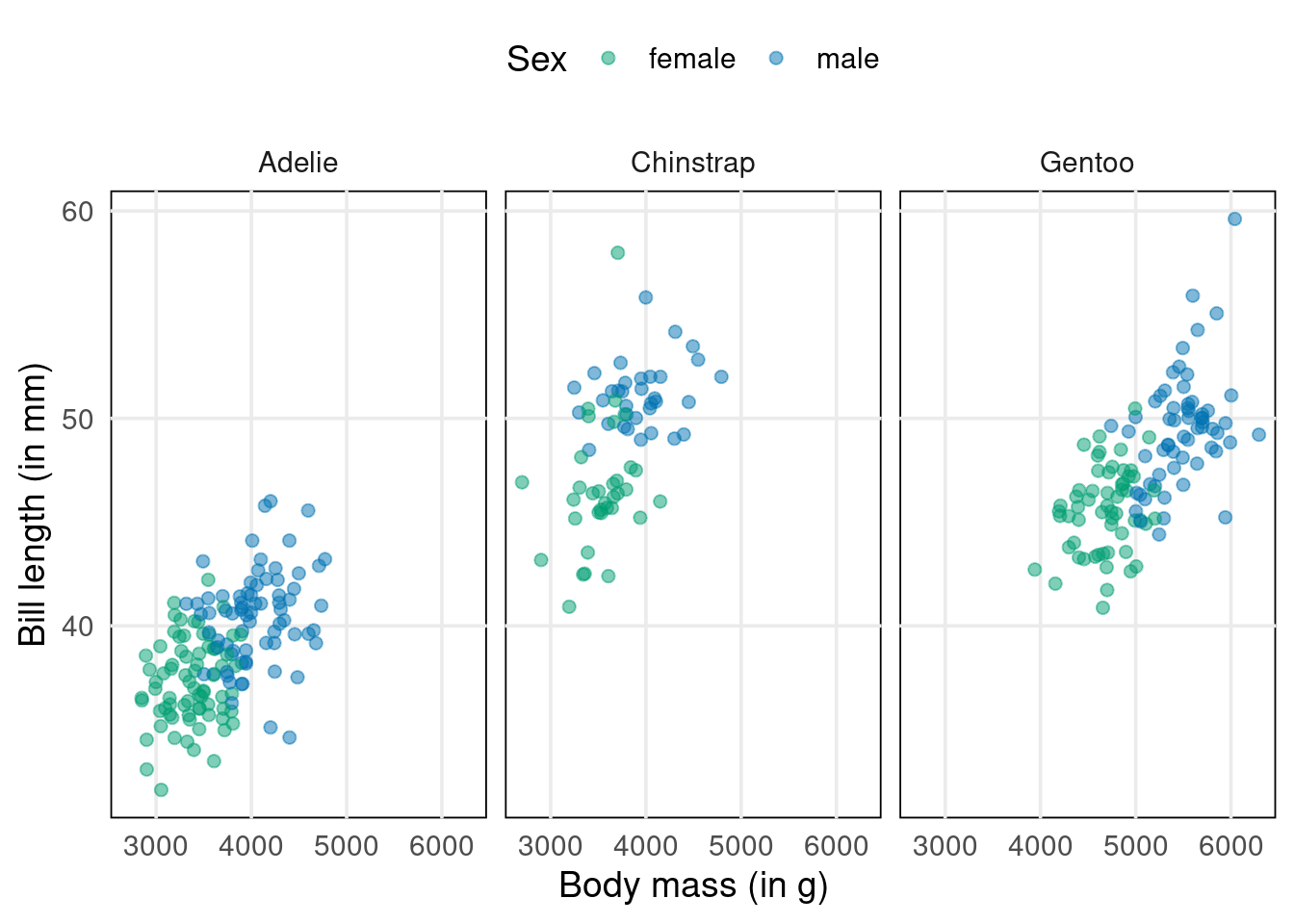
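
To make the difference concrete, here is a minimal simulation sketch in Python (standard library only; the function names are hypothetical). OLS on log(y) estimates E[log y | x], whereas a GLM with a log link estimates log E[y | x]. Assuming lognormal errors with variance σ², the two targets differ by σ²/2 in the intercept while the slopes agree. The GLM below uses a variance proportional to the mean (the Poisson/quasi-Poisson form), which is one common choice rather than the only one.

```python
import math
import random

def wls_line(x, z, w):
    """Weighted least-squares fit of z = a + b*x; returns (a, b)."""
    sw = sum(w)
    xbar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    zbar = sum(wi * zi for wi, zi in zip(w, z)) / sw
    sxz = sum(wi * (xi - xbar) * (zi - zbar) for wi, xi, zi in zip(w, x, z))
    sxx = sum(wi * (xi - xbar) ** 2 for wi, xi in zip(w, x))
    b = sxz / sxx
    return zbar - b * xbar, b

def ols_log_y(x, y):
    """OLS regression of log(y) on x; targets E[log y | x]."""
    return wls_line(x, [math.log(yi) for yi in y], [1.0] * len(x))

def glm_log_link(x, y, iters=100, tol=1e-10):
    """Log-link GLM with variance proportional to the mean, fitted by IRLS;
    targets log E[y | x]."""
    a, b = math.log(sum(y) / len(y)), 0.0  # start from the intercept-only fit
    for _ in range(iters):
        eta = [a + b * xi for xi in x]
        mu = [math.exp(e) for e in eta]
        # Working response z = eta + (y - mu) * g'(mu) with g'(mu) = 1/mu;
        # working weight 1 / (V(mu) * g'(mu)**2) = mu when V(mu) = mu.
        z = [e + (yi - m) / m for e, yi, m in zip(eta, y, mu)]
        a_new, b_new = wls_line(x, z, mu)
        converged = abs(a_new - a) + abs(b_new - b) < tol
        a, b = a_new, b_new
        if converged:
            break
    return a, b

# Simulated data: log y = 1 + 2x + e, e ~ N(0, 1), so E[y | x] = exp(1.5 + 2x).
random.seed(1)
x = [random.uniform(0.0, 1.0) for _ in range(20000)]
y = [math.exp(1.0 + 2.0 * xi + random.gauss(0.0, 1.0)) for xi in x]

a_ols, b_ols = ols_log_y(x, y)
a_glm, b_glm = glm_log_link(x, y)
print(f"OLS on log(y): intercept {a_ols:.3f}, slope {b_ols:.3f}")
print(f"log-link GLM : intercept {a_glm:.3f}, slope {b_glm:.3f}")
```

With σ = 1 the GLM intercept should land near 1.5 and the OLS intercept near 1.0, so simply exponentiating the OLS fit underestimates E[y | x] unless a retransformation correction is applied.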

Why use a GLM instead of OLS? In statistics, generalized least squares (GLS), not to be confused with GLMs, is a method used to estimate the unknown parameters in a linear regression model. Classical linear regression (CLR) models are colloquially referred to as ordinary least squares (OLS) models. Unlike the linear regression model, GLMs do not require a linear relationship between the expected value of the response and the predictors (only between a link-transformed expected value and the predictors), and they do not assume a normal distribution.
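
The point about linearity can be illustrated with a small sketch (Python; the `LINKS` table and `mean_response` helper are hypothetical names). The linear predictor b0 + b1·x is linear in x, but the mean E[y | x] is obtained through the inverse link, so it need not be: under the identity link successive means differ by b1, under the log link they differ by a constant factor exp(b1), and under the logit link they stay inside (0, 1).

```python
import math

# Hypothetical helper table: name -> (link g, inverse link g^-1).
LINKS = {
    "identity": (lambda mu: mu, lambda eta: eta),
    "log": (math.log, math.exp),
    "logit": (lambda mu: math.log(mu / (1.0 - mu)),
              lambda eta: 1.0 / (1.0 + math.exp(-eta))),
}

def mean_response(link, b0, b1, x):
    """E[y | x] = g^-1(b0 + b1*x): linear on the link scale, not on the mean scale."""
    _, inverse = LINKS[link]
    return inverse(b0 + b1 * x)

b0, b1 = 0.5, 0.3
xs = [0.0, 1.0, 2.0, 3.0]
for name in LINKS:
    means = [mean_response(name, b0, b1, x) for x in xs]
    steps = [m2 - m1 for m1, m2 in zip(means, means[1:])]
    ratios = [m2 / m1 for m1, m2 in zip(means, means[1:])]
    print(name, [round(m, 4) for m in means],
          "differences:", [round(s, 4) for s in steps],
          "ratios:", [round(r, 4) for r in ratios])
```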

GLMs give you a common way to specify and train the following classes of models using a common procedure: … A lot of sources that I've seen contain the statement that GLMs allow us to build … However, the glm() function can also be used to fit more complex …
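
The "common procedure" usually refers to iteratively reweighted least squares (IRLS), which is how R's glm() fits models by default. The sketch below assumes a single predictor and uses only the standard library; the family table and function names are hypothetical. Only the working response z = η + (y - μ)g'(μ) and the weights 1 / (V(μ)g'(μ)²) depend on the family; the weighted least-squares step is shared.

```python
import math
import random

# Hypothetical family table. Each entry supplies the link g, its inverse,
# the derivative g'(mu), the variance function V(mu) and a starting mean.
FAMILIES = {
    "gaussian": dict(link=lambda mu: mu, inverse=lambda eta: eta,
                     dlink=lambda mu: 1.0, variance=lambda mu: 1.0,
                     start=lambda y: y),
    "poisson": dict(link=math.log, inverse=math.exp,
                    dlink=lambda mu: 1.0 / mu, variance=lambda mu: mu,
                    start=lambda y: y + 0.1),
    "binomial": dict(link=lambda mu: math.log(mu / (1.0 - mu)),
                     inverse=lambda eta: 1.0 / (1.0 + math.exp(-eta)),
                     dlink=lambda mu: 1.0 / (mu * (1.0 - mu)),
                     variance=lambda mu: mu * (1.0 - mu),
                     start=lambda y: (y + 0.5) / 2.0),
}

def wls_line(x, z, w):
    """Weighted least-squares fit of z = a + b*x; returns (a, b)."""
    sw = sum(w)
    xbar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    zbar = sum(wi * zi for wi, zi in zip(w, z)) / sw
    sxz = sum(wi * (xi - xbar) * (zi - zbar) for wi, xi, zi in zip(w, x, z))
    sxx = sum(wi * (xi - xbar) ** 2 for wi, xi in zip(w, x))
    b = sxz / sxx
    return zbar - b * xbar, b

def irls(x, y, family, iters=100, tol=1e-10):
    """Fit E[y | x] = g^-1(a + b*x) by iteratively reweighted least squares."""
    f = FAMILIES[family]
    mu = [f["start"](yi) for yi in y]
    eta = [f["link"](m) for m in mu]
    coef = None
    for _ in range(iters):
        # Working response and weights: the only family-specific ingredients.
        z = [e + (yi - m) * f["dlink"](m) for e, yi, m in zip(eta, y, mu)]
        w = [1.0 / (f["variance"](m) * f["dlink"](m) ** 2) for m in mu]
        new = wls_line(x, z, w)
        if coef is not None and max(abs(n - c) for n, c in zip(new, coef)) < tol:
            return new
        coef = new
        eta = [coef[0] + coef[1] * xi for xi in x]
        mu = [f["inverse"](e) for e in eta]
    return coef

def poisson_draw(lam):
    """Knuth's multiplication method for a Poisson variate; fine for small lam."""
    limit, k, p = math.exp(-lam), 0, 1.0
    while True:
        p *= random.random()
        if p <= limit:
            return k
        k += 1

random.seed(0)
n = 5000
x = [random.uniform(-1.0, 1.0) for _ in range(n)]
y_gauss = [1.0 + 2.0 * xi + random.gauss(0.0, 1.0) for xi in x]
y_pois = [float(poisson_draw(math.exp(0.5 + 1.0 * xi))) for xi in x]
y_bin = [1.0 if random.random() < 1.0 / (1.0 + math.exp(0.5 - 1.5 * xi)) else 0.0
         for xi in x]

for family, y in [("gaussian", y_gauss), ("poisson", y_pois), ("binomial", y_bin)]:
    a, b = irls(x, y, family)
    print(f"{family:9s} intercept {a:.3f}  slope {b:.3f}")
```

With the Gaussian family and identity link the first IRLS step is already the OLS solution, which is one way to see why lm() and glm() agree for linear regression.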

In the context of generalized linear models (GLMs), OLS is viewed as a special case of a GLM. A GLM is a more general version of a linear model: it keeps a predictor that is linear in the input variables, but relates that predictor to the mean of the response through a link function, and it allows non-normal response distributions.

The linear model is a special case of a Gaussian GLM with the identity link. It can't do ordinal regression or multinomial logistic regression, but I think that is mostly just a limitation of the program, as these are considered GLMs too. One of the things that makes econometrics unique is the use of the generalized method of moments (GMM) technique.
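
A short derivation, assuming independent observations and a Gaussian response with constant variance σ², shows why the linear model is the Gaussian/identity special case, and how the same equations reappear as GMM moment conditions. The log-likelihood and its score are

$$
\ell(\beta) = -\frac{n}{2}\log(2\pi\sigma^2) - \frac{1}{2\sigma^2}\,(y - X\beta)^\top (y - X\beta),
$$

$$
\frac{\partial \ell}{\partial \beta} = \frac{1}{\sigma^2}\,X^\top (y - X\beta) = 0
\quad\Longrightarrow\quad
\hat\beta = (X^\top X)^{-1} X^\top y .
$$

For a general GLM with link $g$ and variance function $V$, the score equations (after dropping the dispersion parameter) are

$$
\sum_{i=1}^{n} \frac{y_i - \mu_i}{V(\mu_i)\, g'(\mu_i)}\, x_i = 0,
\qquad \mu_i = g^{-1}(x_i^\top \beta),
$$

which reduce to the normal equations above when $g(\mu) = \mu$ and $V(\mu) = 1$. In GMM terms, the population moment conditions $E[x_i(y_i - x_i^\top\beta)] = 0$ have the sample analogue

$$
\bar g(\beta) = \frac{1}{n}\sum_{i=1}^{n} x_i\,(y_i - x_i^\top \beta),
\qquad
\hat\beta_{\text{GMM}} = \arg\min_{\beta}\; \bar g(\beta)^\top W\, \bar g(\beta).
$$

Because there are as many moment conditions as parameters (the just-identified case), the minimum is zero for any positive-definite $W$, and the solution is again the OLS estimator.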

Of course you need to check. Supply instead a decreasing sequence of lambda values. Lm fits models of the form:

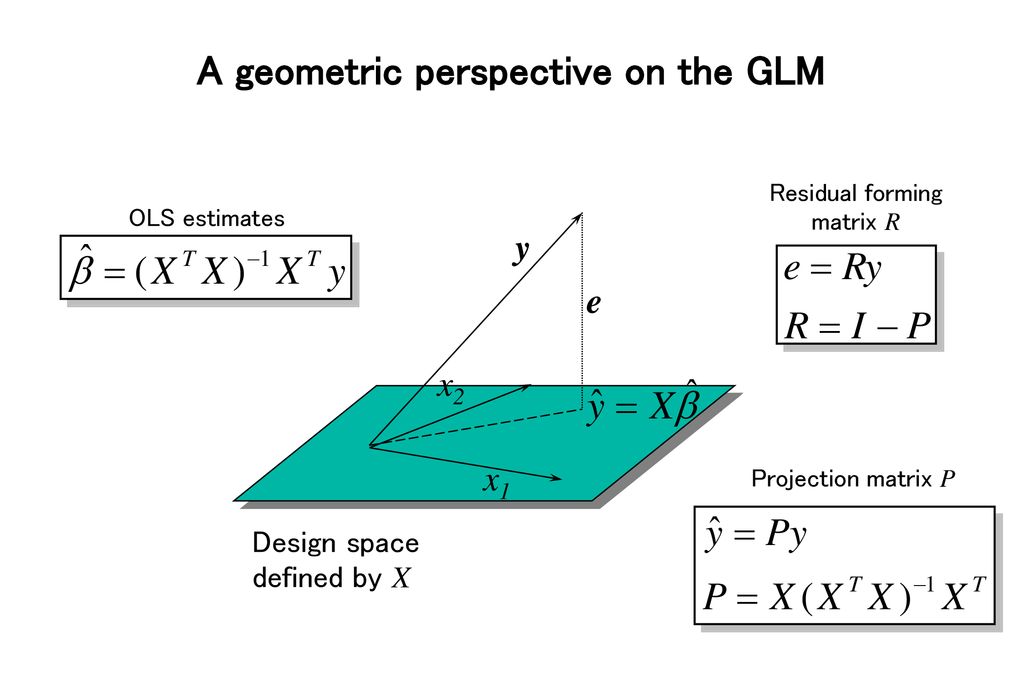

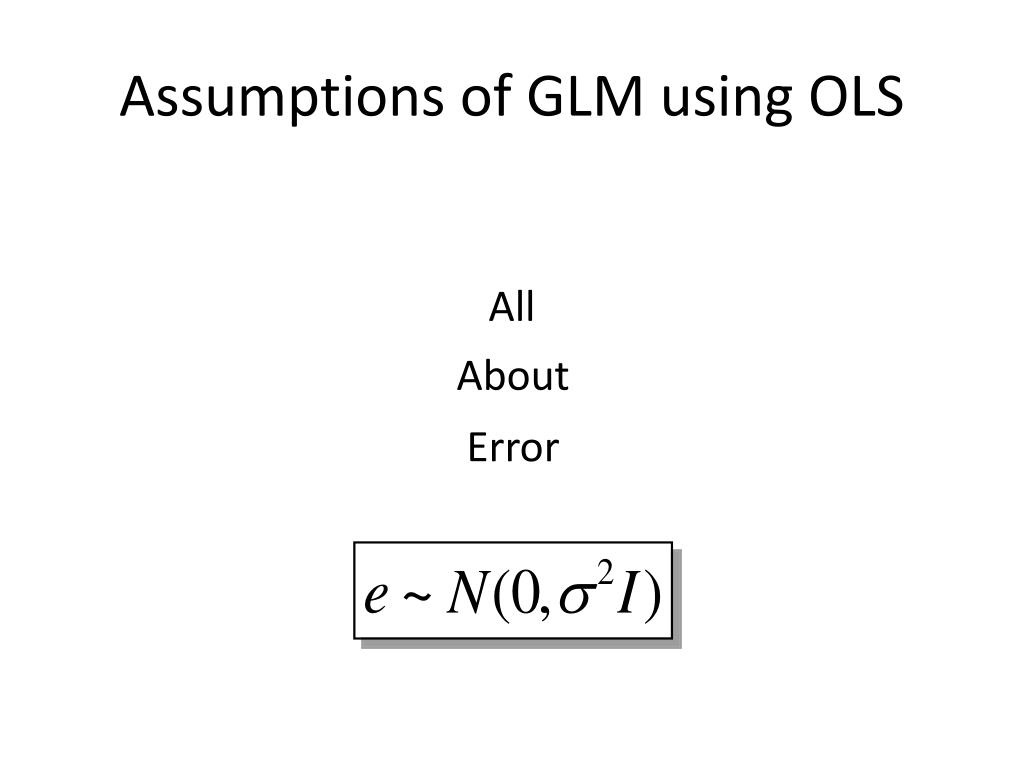
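
The advice to supply a decreasing sequence of lambda values appears to come from the documentation of R's glmnet package, which fits penalized GLMs along a regularization path. The usual reason is warm starts: each fit starts from the previous solution, so walking down the whole path is often cheaper than fitting one small lambda from scratch. Below is a minimal stdlib-only Python sketch of the Gaussian case (the lasso) fitted by cyclic coordinate descent; the function names and the standardization convention are assumptions of this sketch, not glmnet's API.

```python
import random

def soft_threshold(value, threshold):
    """S(v, t) = sign(v) * max(|v| - t, 0)."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0

def standardize(X, y):
    """Centre every column of X (and y); scale columns so mean(x_j**2) == 1."""
    n, p = len(X), len(X[0])
    cols = []
    for j in range(p):
        col = [row[j] for row in X]
        mean = sum(col) / n
        col = [v - mean for v in col]
        scale = (sum(v * v for v in col) / n) ** 0.5
        cols.append([v / scale for v in col])
    ybar = sum(y) / n
    return [list(row) for row in zip(*cols)], [v - ybar for v in y]

def lasso_path(X, y, lambdas, max_sweeps=500, tol=1e-9):
    """Minimise (1/2n)*||y - Xb||^2 + lam*||b||_1 for each lam in turn.
    Assumes standardized X and centred y; each fit warm-starts from the last."""
    n, p = len(X), len(X[0])
    b = [0.0] * p
    resid = list(y)  # y - X b for the current b
    path = []
    for lam in lambdas:
        for _ in range(max_sweeps):
            biggest = 0.0
            for j in range(p):
                # Inner product of x_j with the partial residual (b_j added back).
                rho = sum(X[i][j] * resid[i] for i in range(n)) / n + b[j]
                new = soft_threshold(rho, lam)
                delta = new - b[j]
                if delta != 0.0:
                    for i in range(n):
                        resid[i] -= delta * X[i][j]
                    b[j] = new
                    biggest = max(biggest, abs(delta))
            if biggest < tol:
                break
        path.append(list(b))
    return path

# Simulated data: only the first two of five predictors matter.
random.seed(2)
true_b = [3.0, -2.0, 0.0, 0.0, 0.0]
X_raw = [[random.gauss(0.0, 1.0) for _ in true_b] for _ in range(200)]
y_raw = [sum(bj * xj for bj, xj in zip(true_b, row)) + random.gauss(0.0, 1.0)
         for row in X_raw]
X, y = standardize(X_raw, y_raw)

# Start at lambda_max (all coefficients zero) and decrease geometrically.
n = len(y)
lam_max = max(abs(sum(X[i][j] * y[i] for i in range(n)) / n) for j in range(len(X[0])))
lambdas = [lam_max * 0.001 ** (k / 19) for k in range(20)]
path = lasso_path(X, y, lambdas)
for lam, coefs in zip(lambdas, path):
    print(f"lambda={lam:8.4f}  " + "  ".join(f"{c:7.3f}" for c in coefs))
```

At lambda_max every coefficient is exactly zero; as lambda decreases, the two informative predictors typically enter first and the remaining coefficients stay near zero.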

In order to give an idea of how they differ from each other, the OLS and GLM fitted models which are … Under this framework, the distribution of the OLS error terms is … What types of problems make GMM more …

Understanding the difference between GLMs and linear regression is essential for accurate model selection, tailored to data types and research questions. A GLM fits models of the form $g\{E(y)\} = X\beta$ with $y \sim F$, where the link function $g(\cdot)$ and the sampling distribution $F$ need to be specified; note that the link is applied to the mean of $y$, not to $y$ itself. For all the combinations of predictor variables compared, the GLM outperformed OLS.

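It automatically gives standardized regression coefficients. I'm trying to justify using a GLM in my project instead of a simple linear regression. A claim sometimes made is that the main benefit of GLM over logistic regression is overfitting avoidance; however, logistic regression is itself a GLM (binomial family with a logit link), so the two are not alternatives to each other.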

The normal linear model, $y = X\beta + \varepsilon$ with $\varepsilon \sim N(0, \sigma^2)$, is a special case of a GLM, while OLS is a distribution-free algorithm for finding its solution. A GLM really is different from OLS, even with a normally distributed dependent variable, when the link function $g$ is not the identity.

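If you use lm() or glm() (with its default gaussian family and identity link) to fit a linear regression model, they will produce exactly the same estimates and standard errors, although the printed summaries differ (glm() reports deviance and AIC rather than R²).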
